Loukas Konstantinou will be presenting a paper at CHI’25 on a scoping review of behavior change interventions against online misinformation.

- Konstantinou, L. and Karapanos, E. (2025) Behavior Change Interventions Combating Online Misinformation: A Scoping Review. In CHI Conference on Human Factors in Computing Systems (CHI ’25), April 26-May 1, 2025, Yokohama, Japan. ACM, New York, NY, USA, 19 pages.

As part of his PhD studies, Loukas inquired into existing behavioral interventions and asked:

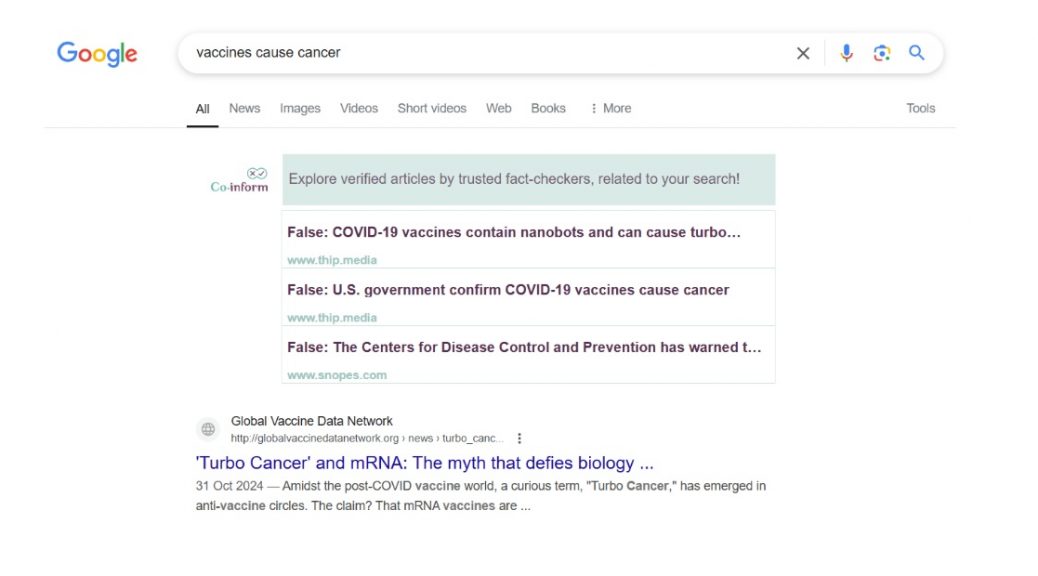

- Which behavioral objectives were the interventions designed to pursue? He identified 17 distinct objectives, such as instilling reflective content composition or increasing exposure to fact-checking, and classified them into three stages of the “News Process” cycle: composition, amplification and consumption. We believe that this framework of objectives and stages can guide the early steps of intervention design, as it may structure the discussion around the intervention's scope and the means to achieve it. Moreover, we found that the majority of interventions target misinformation during the consumption stage (47%), followed by amplification (29%) and composition (24%), highlighting the need for further exploration of behavioral interventions targeting the earlier stages of the news cycle.

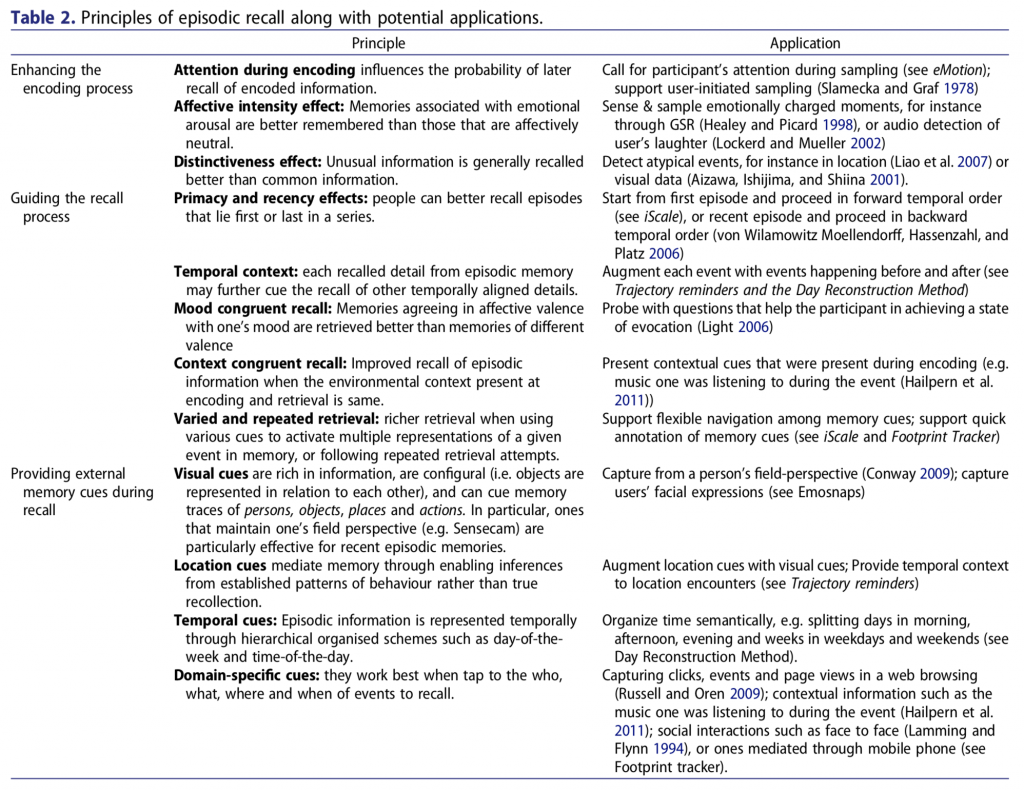

- Which theories of attitude or behavior change were interventions grounded in? He identified 24 theoretical frameworks employed in the design of interventions and classified them into five broad categories: theories of persuasion, decision-making, motivation, socially situated theories, and finally, descriptive theories & models.

- How prevalent is stakeholder involvement in the design of interventions? The design of behavioral interventions is approached by many disciplines, with each discipline bringing unique strengths to intervention design. For instance, the Behavioral Sciences emphasize theory and evidence-based intervention design, while HCI emphasizes the need for iterative design and evaluation cycles. Loukas identified three types of design approaches: ones rooted in user-centered design methodologies, ones rooted in participatory design, and ones rooted in behavioral science frameworks. We were surprised to find that more than half of the projects engaged in some form of parallel design. Approximately 70% of the projects involved stakeholders during the design of interventions. However, in the vast majority of cases, these were experts such as journalists and media experts, and only 2 out of 10 of the interventions were developed with the help of end-users.

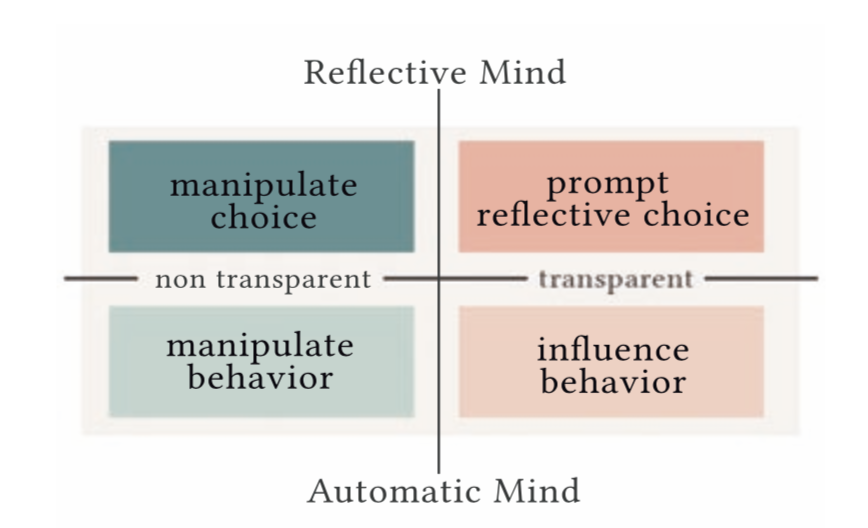

- To what extent were interventions evaluated to assess their behavioral or attitudinal effects? Our prior work on the use of nudging in the HCI literature had revealed a rather problematic picture: 65% of the empirical studies of nudging lasted a day or less, only 19% lasted over a month, and only 14% inquired into whether the effects of the nudge hold after its removal. Loukas’ review revealed a more positive picture. About 4 in 10 of the interventions were evaluated through a field trial, lasting from weeks (39%) to months (56%), or even more than a year (6%). About half of the interventions were compared against a control condition, while another 37% were evaluated through a multiple-arm design, comparing two or more variations of the intervention. The median sample size was 264, with only 13% of the interventions studied with a sample of fewer than 100 participants. Taken together, these findings paint a promising picture, highlighting a growing recognition of the importance of ecological validity.

- While this work neither aimed at nor could support a systematic inquiry into interventions’ efficacy, given the small and divergent sample of interventions in terms of their behavioral objectives, techniques, topics and participants, we found that almost half of the interventions in our sample either failed or partially failed to produce a significant effect on their primary outcomes. In line with Klasnja et al., this highlights the importance of measuring intermediate outcomes. We found that only 20% of the studies inquired into interventions’ impact on an intermediate outcome. Such inquiries are critical in HCI research as they contribute to an understanding of which behavior change techniques work, when, and for whom, and can shed light on the dynamic, sequential nature of misinformation and its mitigation, from one’s initial exposure to the development of misperception, and the formation of attitudes and subsequent behaviors.

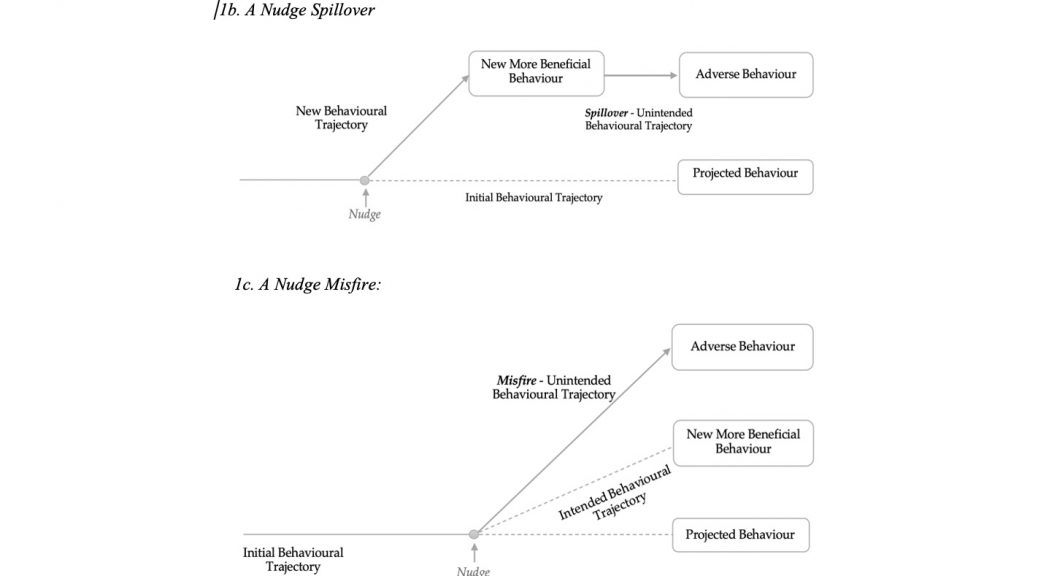

- What are the reasons for interventions’ failure? Building on prior taxonomies by Sunstein (2017) and our own work (Caraban et al., 2019), Loukas identified nine reasons for interventions’ failure, such as geo-cultural factors, the lack of educational effects, behavioral or attitudinal effects not being sustained over time, or unexpected effects and backfiring, among others. Interestingly, only a few researchers explored ways to counter such behavioral failures.

Lastly, to assist researchers and practitioners in the design and development of behavioral interventions against misinformation, Loukas synthesized some of the findings into a set of design cards, the Behavioral Responses to Misinformation cards.